2.7 Scraping Data

We have discussed a variety of ways that we can obtain and manipulate archaeological data. These have depended on the owners or controllers of that data making positive choices about how that data is structured and exposed to the web. Often, however, we will encounter situations in which we want to use data that has been made available in ways that don’t allow for easy extraction. The Atlas of Roman Pottery, for instance, is a valuable resource, but what if you wanted to remix it with other data sources? What if you wanted to create a handy offline reference chart for just the particular wares likely to be found on your site? It is not immediately clear from that website whether such a use is permitted, but it is easy to imagine someone doing such a thing. After all, it is not uncommon on an excavation to have well-thumbed stacks of photocopies of various reference works on hand (which is also likely a copyright violation).

In this gray area we find the techniques of web scraping. Every web page you visit is downloaded locally to your browser; it is your browser that renders the information so that you can read the page. Scraping involves freeing the data of interest from the browser into a format that is usable for your particular problem (often a txt or csv file). This can be done manually by copying and pasting, but automated techniques are less error-prone and more complete. There are browser plugins that will semi-automate this for you; one commonly used plugin for Chrome is Scraper. Once Scraper is installed, you can right-click on the information you’re interested in and select ‘scrape similar’. Scraper then examines the underlying code that generates the page and extracts all of the information that sits in a similar location in the ‘document object model’ (DOM), the tree of elements that the browser builds from that code. This Library Carpentry workshop provides an excellent walkthrough of the technical and legal issues involved in using Scraper, and is well worth your time. Another excellent tutorial on writing your own scraper that works in a similar vein (identifying patterns in how the site is built, and pulling out the data that sits inside those patterns) is available on the Programming Historian.
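To make the ‘scrape similar’ idea concrete, here is a minimal sketch in Python using only the standard library’s html.parser. It assumes a hypothetical page on which each record sits in its own table row, so ‘everything in a similar location in the DOM’ becomes ‘the text of every cell in every `<tr>`’. The sample HTML and the helper names (TableRowScraper, scrape_rows, rows_to_csv) are invented for illustration; Scraper itself works inside the browser and does not use code like this.

```python
import csv
import io
from html.parser import HTMLParser


class TableRowScraper(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # row currently being built
        self._cell = None  # text fragments of the cell currently open

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Join the fragments and collapse runs of white space.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:  # ignore text outside table cells
            self._cell.append(data)


# Hypothetical page fragment: every record occupies the same position in the DOM.
SAMPLE_HTML = """
<table>
  <tr><th>Ware</th><th>Date range</th></tr>
  <tr><td>Ware A</td><td>1st-2nd c. AD</td></tr>
  <tr><td>Ware B</td><td>3rd-4th c. AD</td></tr>
</table>
"""


def scrape_rows(html):
    parser = TableRowScraper()
    parser.feed(html)
    parser.close()
    return parser.rows


def rows_to_csv(rows):
    buffer = io.StringIO()
    csv.writer(buffer).writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(rows_to_csv(scrape_rows(SAMPLE_HTML)))
```

In practice you would write the CSV text to a file rather than printing it, and you would adjust which tags are collected to match the pattern on the page you are scraping.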

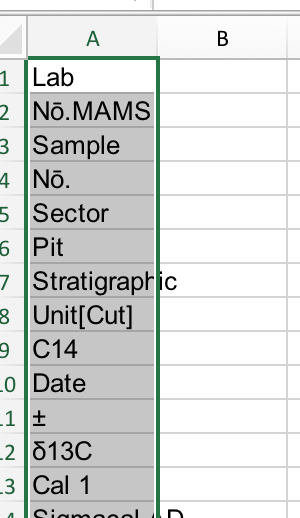

At other times, we wish to reuse or incorporate the published data from an archaeological study in order to complement or contrast with our own work. For instance, Lane, Pomeroy and Davila (2018), “Over Rock and Under Stone: Carved Rocks and Subterranean Burials at Kipia, Ancash, AD 1000 – 1532” (Open Archaeology 4(1), pp. 299-321, doi:10.1515/opar-2018-0018), contains a number of data tables. Their Table 1, for instance, is a list of calibrated radiocarbon dates. How can we integrate these into our own studies? Many journal PDFs have a hidden text layer on top of the image of the typeset page (PDFs made from journal articles from the mid-1990s or earlier are typically just images and do not contain this hidden layer). If you can highlight the text and copy and paste it, then you have grabbed this hidden layer. But let’s try that with Table 1. This is what we end up with:

Lab Nō.MAMSSample Nō.SectorPitStratigraphic Unit[Cut]C14 Date±13C Cal 1 Sigmacal ADCal 2 Sigmacal AD15861Ca-8B234 [22]48922-21,31421-14381412-144415862Ca-21A32846923-24,21428-14441416-144915863Ca-22A328 [48]97622-18,71021-11461016-115315864Ca-23A33439423-20,21448-16071442-161815865Ca-25B272 [71]80222-19,01222-12561193-127215866Ca-27B41335818-17,11472-16181459-163015867Ca-28B6 (RF4)20 [19]48219-27,91424-14391415-144415868Ca-29B41335920-17,91470-16191456-1631T

Not entirely useful. If you paste directly into a spreadsheet, you end up with something even worse:

Pasting Lane et al. Table 1 into Excel

The solution in this case is a tool called ‘Tabula’. Tabula allows you to define the area on the page where the data of interest is located; it then tries to work out how the text is laid out (especially with regard to white space) so that you can paste the result cleanly into a spreadsheet or document (assuming that the PDF has that hidden text layer). In the Jupyter Notebook for this section we provide an R script that calls Tabula and then passes the result into a dataframe, so that you can immediately begin working with the data. To run this script, launch the binder and then click ‘new -> RStudio session’. Within RStudio, click ‘File > Open File...’ and open ‘Extracting data from pdfs using Tabulizr.R’. The script is commented to explain what each line achieves; run each line in turn, from top to bottom, by pressing the ‘Run’ button (or using the keyboard shortcut Ctrl+Enter, or Cmd+Enter on a Mac).
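Tabula’s own detection methods are more sophisticated, but the core idea of using white space to recover columns can be sketched in a few lines of Python. This is not Tabula’s code or API. It assumes you already have each word’s horizontal extent and vertical position from the PDF’s hidden text layer (the coordinates below are invented, although the values echo the first rows of Lane et al.’s Table 1). Words are grouped into rows by vertical position, and any horizontal band that no word touches and that is at least `min_gap` units wide is treated as a gap between columns; `y_tolerance` and `min_gap` are illustrative parameters, not Tabula settings.

```python
from collections import defaultdict

# Each word is (x_start, x_end, y, text), roughly what a PDF text layer can report.
# The coordinates are invented for illustration.
WORDS = [
    (10, 30, 100, "Lab"), (34, 52, 100, "No."),
    (90, 125, 100, "Sample"), (129, 147, 100, "No."),
    (170, 190, 100, "C14"), (194, 214, 100, "Date"),
    (10, 45, 112, "15861"), (90, 115, 112, "Ca-8"), (170, 190, 112, "489"),
    (10, 45, 124, "15862"), (90, 120, 124, "Ca-21"), (170, 190, 124, "469"),
]


def group_rows(words, y_tolerance=3):
    """Group words whose vertical positions are within y_tolerance into rows."""
    rows = defaultdict(list)
    for word in words:
        y = word[2]
        # Reuse an existing row key if this word sits close enough to it.
        key = next((k for k in rows if abs(k - y) <= y_tolerance), y)
        rows[key].append(word)
    # Rows ordered by vertical position, words in each row ordered left to right.
    return [sorted(rows[k]) for k in sorted(rows)]


def find_columns(words, min_gap=10):
    """Merge word extents; white-space gaps of at least min_gap split columns."""
    spans = sorted((x0, x1) for x0, x1, _, _ in words)
    columns = []
    start, end = spans[0]
    for x0, x1 in spans[1:]:
        if x0 - end >= min_gap:
            columns.append((start, end))
            start, end = x0, x1
        else:
            end = max(end, x1)
    columns.append((start, end))
    return columns


def extract_table(words, y_tolerance=3, min_gap=10):
    """Assign every word to a (row, column) cell and join the words in each cell."""
    columns = find_columns(words, min_gap)
    table = []
    for row_words in group_rows(words, y_tolerance):
        cells = [[] for _ in columns]
        for x0, _, _, text in row_words:
            for i, (c0, c1) in enumerate(columns):
                if c0 <= x0 <= c1:
                    cells[i].append(text)
                    break
        table.append([" ".join(cell) for cell in cells])
    return table


if __name__ == "__main__":
    for row in extract_table(WORDS):
        print(row)
```

Because the sketch works from positions rather than from the character stream, it keeps values such as ‘15861’ and ‘Ca-8’ apart, where the copied text above runs them together. The thresholds matter: set `min_gap` too small and multi-word headers split apart; set it too large and neighboring columns merge.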

This script works well with tabular data, but what if we wanted to recover information or data from other kinds of charts or diagrams? This is where Daniel Noble’s ‘metaDigitise’ package for R comes into play. This package enables you to bring an image of a graph or plot into RStudio and then trace over reference features such as scale bars and axes, so that the package can work out the actual values that the original graph plotted. The results are then available for further manipulation or statistical analysis. Since so many archaeological studies do not publish their source data (perhaps because they were written at a time when reproducibility or replicability were not as feasible as they are today), this tool is an important element of a digital archaeological approach to pulling data together. In the binder launched above there is a script called ‘metaDigitise-example.R’. Open it within RStudio and work through its steps to get a sense of what can be accomplished with it.
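The arithmetic behind this kind of digitizing is linear interpolation. Once you have told the software which pixel positions correspond to two known values on an axis, any other pixel position p on that axis can be converted to a data value v = v1 + (p - p1)(v2 - v1) / (p2 - p1), where (p1, v1) and (p2, v2) are the two reference points. The Python sketch below illustrates that formula for a plot with linear (not logarithmic) axes; it is not metaDigitise’s code, and the names `AxisCalibration` and `pixels_to_data` and all pixel coordinates are invented.

```python
from dataclasses import dataclass


@dataclass
class AxisCalibration:
    """Two reference points on one axis: their pixel positions and the values printed there."""
    pixel_1: float
    value_1: float
    pixel_2: float
    value_2: float

    def __post_init__(self):
        if self.pixel_1 == self.pixel_2:
            raise ValueError("calibration points must be at different pixel positions")

    def to_value(self, pixel):
        # Linear interpolation: v = v1 + (p - p1) * (v2 - v1) / (p2 - p1).
        return self.value_1 + (pixel - self.pixel_1) * (self.value_2 - self.value_1) / (
            self.pixel_2 - self.pixel_1
        )


def pixels_to_data(points, x_axis, y_axis):
    """Convert clicked (x_pixel, y_pixel) positions into (x_value, y_value) pairs."""
    return [(x_axis.to_value(px), y_axis.to_value(py)) for px, py in points]


# Invented calibration: x ticks 0 and 100 sit at pixels 50 and 450;
# y ticks 0 and 10 sit at pixels 400 and 100 (image y pixels grow downwards).
X_AXIS = AxisCalibration(pixel_1=50, value_1=0, pixel_2=450, value_2=100)
Y_AXIS = AxisCalibration(pixel_1=400, value_1=0, pixel_2=100, value_2=10)

if __name__ == "__main__":
    clicked = [(250, 250), (450, 100)]  # pixel positions of two plotted points
    print(pixels_to_data(clicked, X_AXIS, Y_AXIS))
```

Because each axis is calibrated separately, the same code copes with image coordinates in which y grows downwards: the y calibration simply has a negative slope. A logarithmic axis would need the interpolation applied to log-transformed values instead.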

For a full walkthrough of this package (which was conceived as a tool for meta-analyses), see the original documentation.

Finally, there is the extremely powerful Scrapy framework for Python, which allows you to build a custom scraper for use on a wide variety of sites. We also include a walkthrough of this tutorial, which builds a scraper from scratch using Finds.org.uk data.
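At its heart, a framework like Scrapy automates a crawl loop: start from a URL, parse each downloaded page for records, queue links to further pages (such as the page links in a set of search results), never request the same page twice, and pause between requests so as not to overload the server. The sketch below shows that loop in plain Python so the moving parts are visible. It does not use Scrapy or its API; `fetch` is a hypothetical stand-in that returns canned HTML from a dictionary instead of downloading anything, and the page markup (`class="record"`, `class="page"`) is invented. Regular expressions keep the sketch short, whereas Scrapy gives you proper CSS and XPath selectors.

```python
import re
import time
from collections import deque
from urllib.parse import urljoin

# Hypothetical canned pages standing in for a paginated search-results site.
PAGES = {
    "https://example.org/search?page=1": (
        '<div class="record">Brooch</div>'
        '<div class="record">Coin</div>'
        '<a class="page" href="/search?page=1">1</a>'
        '<a class="page" href="/search?page=2">2</a>'
    ),
    "https://example.org/search?page=2": (
        '<div class="record">Buckle</div>'
        '<a class="page" href="/search?page=1">1</a>'
        '<a class="page" href="/search?page=2">2</a>'
    ),
}

RECORD_RE = re.compile(r'<div class="record">(.*?)</div>')
PAGE_LINK_RE = re.compile(r'<a class="page" href="([^"]+)"')


def fetch(url):
    """Stand-in for an HTTP request; a real crawler would download the page here."""
    return PAGES[url]


def crawl(start_url, delay_seconds=1.0, max_pages=50):
    queue = deque([start_url])
    seen = set()
    records = []
    while queue and len(seen) < max_pages:
        url = queue.popleft()
        if url in seen:
            continue  # never request the same page twice
        seen.add(url)
        html = fetch(url)
        records.extend(RECORD_RE.findall(html))
        for href in PAGE_LINK_RE.findall(html):
            queue.append(urljoin(url, href))  # resolve relative links
        time.sleep(delay_seconds)  # pause politely between requests
    return records


if __name__ == "__main__":
    print(crawl("https://example.org/search?page=1", delay_seconds=0))
```

In Scrapy itself these concerns are handled for you: duplicate requests are filtered by default, and settings such as `DOWNLOAD_DELAY` and `ROBOTSTXT_OBEY` control how politely the spider behaves.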

2.7.1 Exercises

- Complete the Scrapy walkthrough. Then start again from scratch on another museum’s collection page (for instance, these search results from the Canadian Museum of History). What do you notice about the way the data is laid out? Where are the ‘gotchas’? Examine the terms of service for both sites. What ethical or legal issues might scraping present? To what degree should museums be presenting data for both humans and machines to read? Is there an argument for not doing this?

- Read Christen (2012) and consider the issues of access to digital cultural heritage from this viewpoint. Where should digital archaeology intersect with these concerns?
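Terms of service have to be read by a person, but many sites also publish machine-readable crawling rules in a robots.txt file, and checking them is a sensible first step before pointing a scraper at a collection. Following robots.txt is a convention, not a substitute for the ethical and legal questions raised in the exercises above. The sketch below uses Python’s standard-library `urllib.robotparser`; the robots.txt content, URLs and user-agent string are invented rather than taken from either museum’s site.

```python
from urllib.robotparser import RobotFileParser

# Invented robots.txt for a hypothetical collections site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Crawl-delay: 5
"""


def make_parser(robots_txt):
    """Build a parser from robots.txt text already in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = make_parser(ROBOTS_TXT)
agent = "my-archaeology-scraper"  # hypothetical user-agent string

for url in ("https://example.org/collection/search?q=brooch",
            "https://example.org/admin/login"):
    print(url, "allowed" if rules.can_fetch(agent, url) else "disallowed")

print("Requested delay between requests (seconds):", rules.crawl_delay(agent))
```

Against a live site you would instead call `set_url()` with the address of the site’s robots.txt and then `read()` before checking URLs.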

References

Christen, Kimberly A. 2012. “Does Information Really Want to Be Free? Indigenous Knowledge Systems and the Question of Openness.” International Journal of Communication 6. http://ijoc.org/index.php/ijoc/article/view/1618.